Most people's first brush with AI is a chatbot that talks back or an app that paints pictures in seconds. The real story is unfolding in the world we live in, where machines don't just reply; they observe, analyze, and act. Whether it's cameras picking up on hidden patterns in crowds or systems keeping our streets, workplaces, and waters safe, AI is becoming the connective tissue between digital intelligence and real-world impact.

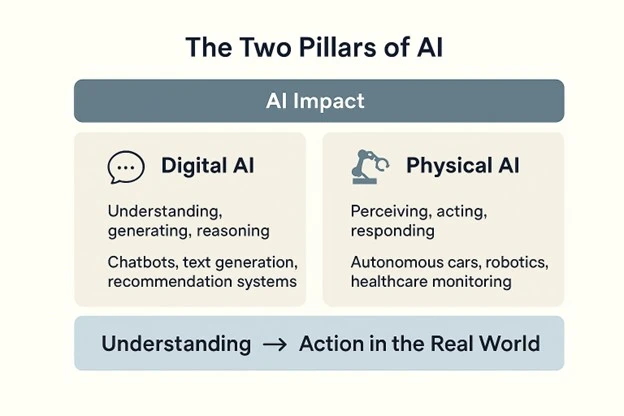

And this impact isn't one big picture; it's many. Digital AI paved the way for a new frontier: physical AI. While digital AI automates conversations and information, physical AI takes action in the real world: cars navigating streets, robots assisting in factories, and systems working alongside us to keep people safe.

Why Do We Need Physical AI?

Digital AI is powerful, but it lives on screens. It can draft a report, answer a question, or generate an image, but it doesn't move through the same world we do. The challenges that shape our lives are physical: traffic that clogs our roads, accidents that happen in seconds, factories that must keep running without error, and lives that can be saved with a faster response.

This is where physical AI steps in. By sensing, understanding, and acting in real environments, it can take on tasks that are too dangerous, too repetitive, or too complex for humans alone. Think of it as the bridge between data and action: the moment when intelligence stops being passive and starts making a difference we can actually feel.

Once we see why physical AI matters, the next question is simple: where is it already making a difference?

Domains of Impact

Across industries and environments, intelligent systems are moving from passive observation to active intervention.

1. On the Roads

Traffic is a problem of scale: millions of vehicles, each making split-second decisions. Physical AI here powers self-driving cars, adaptive traffic lights, and roadside cameras that don't just record incidents but respond to them in real time. Behind this are computer vision algorithms, LiDAR fusion, and deep learning models capable of tracking and predicting movement at human or even superhuman speeds.

How it works:

Road systems typically rely on object detection models like YOLO to identify vehicles and pedestrians, multi-object trackers to follow them across frames, and trajectory prediction networks to anticipate where they'll move next. Together with LiDAR and radar, these components enable everything from autonomous driving to traffic signal optimization.
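To make the tracking and prediction steps concrete, here is a minimal sketch in Python. It assumes a detector such as YOLO has already produced bounding boxes for each frame. The `GreedyIouTracker` class, its `iou_threshold` parameter, and the constant-velocity `predict_center` method are illustrative names and simplifications, not what a production road system would use: real trackers handle occlusion and retire lost tracks, and real predictors are learned networks.

```python
from dataclasses import dataclass, field

Box = tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def center(box: Box) -> tuple[float, float]:
    return ((box[0] + box[2]) / 2, (box[1] + box[3]) / 2)


@dataclass
class Track:
    track_id: int
    boxes: list[Box] = field(default_factory=list)

    def predict_center(self, steps: int = 1) -> tuple[float, float]:
        """Constant-velocity extrapolation from the last two observations."""
        cx, cy = center(self.boxes[-1])
        if len(self.boxes) < 2:
            return (cx, cy)  # no motion history yet
        px, py = center(self.boxes[-2])
        return (cx + steps * (cx - px), cy + steps * (cy - py))


class GreedyIouTracker:
    """Hypothetical tracker: matches each track to its best-overlapping box."""

    def __init__(self, iou_threshold: float = 0.3):
        self.iou_threshold = iou_threshold
        self.tracks: list[Track] = []
        self._next_id = 0

    def update(self, detections: list[Box]) -> list[Track]:
        unmatched = list(detections)
        # Greedy, order-dependent matching; tracks are never retired here.
        for track in self.tracks:
            if not unmatched:
                break
            best = max(unmatched, key=lambda d: iou(track.boxes[-1], d))
            if iou(track.boxes[-1], best) >= self.iou_threshold:
                track.boxes.append(best)
                unmatched.remove(best)
        # Any detection left over starts a new track.
        for det in unmatched:
            self.tracks.append(Track(self._next_id, [det]))
            self._next_id += 1
        return self.tracks
```

Calling `update` once per frame with that frame's boxes keeps identities stable while objects overlap their previous position, and `predict_center(steps=k)` gives a rough guess of where a tracked object will be k frames later.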

2. At Work

Factories and warehouses are becoming hybrid environments where humans and robots share the floor. AI-powered manipulators can identify objects, adapt to different shapes, and handle repetitive or hazardous tasks with precision. Reinforcement learning and sensor fusion allow these systems to keep improving while staying safe around people.

How it works:

Robotic systems use 3D perception from cameras and depth sensors to understand their environment. Grasp detection models (GPD, GG-CNN) figure out the right way to pick up objects, while motion planning algorithms calculate safe and efficient paths. Over time, reinforcement learning helps them refine their skills in real-world conditions.
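One way to picture the motion planning step is as a search over a map of the workspace. The sketch below runs A* search on a small occupancy grid. The grid, the `plan_path` function, and the 4-connected movement model are assumptions made for illustration; real manipulators usually plan in continuous joint space with much richer collision checking.

```python
import heapq
from typing import Optional

Cell = tuple[int, int]  # (row, col)


def plan_path(grid: list[list[int]], start: Cell, goal: Cell) -> Optional[list[Cell]]:
    """A* on a 4-connected occupancy grid (0 = free, 1 = obstacle).

    Returns the shortest list of cells from start to goal, or None if
    the goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell: Cell) -> int:
        # Manhattan distance never overestimates on a 4-connected grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    frontier = [(heuristic(start), 0, start)]  # (priority, cost, cell)
    came_from = {start: None}
    cost_so_far = {start: 0}

    while frontier:
        _, cost, current = heapq.heappop(frontier)
        if current == goal:
            # Walk the parent links back to the start.
            path = []
            node = current
            while node is not None:
                path.append(node)
                node = came_from[node]
            return path[::-1]
        if cost > cost_so_far[current]:
            continue  # stale queue entry, a cheaper route was found already
        r, c = current
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if not (0 <= nr < rows and 0 <= nc < cols) or grid[nr][nc] == 1:
                continue
            new_cost = cost + 1
            if new_cost < cost_so_far.get((nr, nc), float("inf")):
                cost_so_far[(nr, nc)] = new_cost
                came_from[(nr, nc)] = current
                priority = new_cost + heuristic((nr, nc))
                heapq.heappush(frontier, (priority, new_cost, (nr, nc)))
    return None
```

Here "safe" simply means never entering an obstacle cell and "efficient" means the fewest moves; a real planner would also account for clearance, joint limits, and people moving through the space.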

3. Public Spaces & Safety

Surveillance is no longer just about recording what happens; it's about understanding it in real time. With physical AI, cameras can spot unusual patterns, catch early signs of accidents, or raise the alarm if someone is in distress in a crowd. Powered by video analysis models that learn how actions unfold over time, these systems can tell the difference between ordinary activity and real danger, making faster intervention possible when it matters most.

How it works:

A common stack combines a detector (YOLO, DETR) to locate people, a tracker (DeepSORT, ByteTrack) to maintain identities, and an action recognition model (SlowFast, X3D, 3D CNNs) to interpret behaviors over time. This lets systems not only see where people are but also understand what they're doing.
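The glue between the tracker and the action model is often a per-person window of recent frames. The sketch below shows that idea only. `ClipBuffer` and `speed_rule` are hypothetical names, and `speed_rule` is a toy rule standing in for a learned model such as SlowFast, which would consume video clips rather than 2D positions.

```python
from collections import defaultdict, deque
from typing import Callable, Optional

Point = tuple[float, float]  # tracked position in one frame


class ClipBuffer:
    """Keeps a fixed-length window of observations for each track ID."""

    def __init__(self, clip_len: int, classify: Callable[[list[Point]], str]):
        if clip_len < 2:
            raise ValueError("clip_len must be at least 2")
        self.clip_len = clip_len
        self.classify = classify
        self._windows = defaultdict(lambda: deque(maxlen=clip_len))

    def push(self, track_id: int, position: Point) -> Optional[str]:
        """Add one observation; return a label once the window is full."""
        window = self._windows[track_id]
        window.append(position)
        if len(window) < self.clip_len:
            return None  # not enough temporal context yet
        return self.classify(list(window))


def speed_rule(clip: list[Point], fast: float = 5.0) -> str:
    """Toy stand-in for an action model: mean displacement per frame."""
    steps = [
        ((x2 - x1) ** 2 + (y2 - y1) ** 2) ** 0.5
        for (x1, y1), (x2, y2) in zip(clip, clip[1:])
    ]
    mean_step = sum(steps) / len(steps)
    if mean_step < 0.5:
        return "standing"
    return "running" if mean_step >= fast else "walking"
```

Because each track ID has its own window, two people in the same frame get independent labels, which is what lets the system say what each person is doing rather than only where they are.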

4. Health & Care

In hospitals and homes, every second counts. AI-powered helpers are stepping in to monitor vitals, prevent falls, and support the elderly with everyday tasks. By combining signals from cameras, sound, and health sensors, these systems are learning to move from simply reacting to problems toward predicting and preventing them before they happen.

How it works:

Wearables and sensors feed data into time-series models (like RNNs or Transformers) that flag anomalies in heart rate, breathing, or movement. Pose estimation frameworks (OpenPose, MediaPipe) detect risky motion patterns such as falls. By fusing data from multiple modalities (video, audio, and vitals), these systems can shift from detection to prevention.
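The anomaly-flagging idea can be illustrated without a neural network. The sketch below swaps in a much simpler technique, a rolling z-score, in place of the RNN or Transformer models mentioned above. The `RollingZScoreDetector` class, its `window` and `threshold` parameters, and the sample heart-rate values are all assumptions for illustration, not clinical guidance.

```python
from collections import deque
from statistics import mean, stdev


class RollingZScoreDetector:
    """Flags a reading that sits far from the recent baseline."""

    def __init__(self, window: int = 30, threshold: float = 3.0):
        self.threshold = threshold
        self._history = deque(maxlen=window)

    def update(self, value: float) -> bool:
        """Return True if `value` is anomalous relative to recent readings."""
        is_anomaly = False
        if len(self._history) >= 2:  # stdev needs at least two points
            mu = mean(self._history)
            sigma = stdev(self._history)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        # Flagged readings are kept out of the baseline so one spike does
        # not mask the next; the trade-off is slower adaptation to a
        # genuine, lasting change in level.
        if not is_anomaly:
            self._history.append(value)
        return is_anomaly


# Hypothetical resting heart-rate stream (beats per minute) with one spike.
detector = RollingZScoreDetector(window=10, threshold=3.0)
readings = [70, 71, 69, 70, 72, 71, 70, 69, 71, 70, 140, 70]
flags = [detector.update(r) for r in readings]
```

A learned time-series model plays the same role as the z-score here, but it can pick up on patterns across several signals at once instead of a single threshold on one of them.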

5. Everywhere Else

Physical AI isn't confined to roads, factories, or hospitals; it's spreading across agriculture, energy, retail, and even our homes. From drones that monitor crops, to smart grids balancing electricity, to checkout-free shopping powered by computer vision, the reach is vast. Many of these systems rely on the same underlying building blocks, like sensors, vision models, and adaptive learning, but each domain brings its own twist. What they all share is the same trajectory: turning raw data into decisions that play out in the real world.

How it works:

In agriculture, drone-mounted detectors (YOLOv8, Mask R-CNN) scan crops for stress or disease. In retail, multi-camera vision with re-identification models enables checkout-free experiences. In energy, anomaly detection algorithms forecast grid failures before they happen. The common pipeline is: sensors → detector/tracker → domain-specific AI → decision/action.
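That shared pipeline can be sketched as a chain of stages, each feeding its output to the next. Everything in the example below is hypothetical: `run_pipeline`, the three toy stages, the greenness scores, and the thresholds are placeholders for a real sensor feed, a trained detector, and a domain model.

```python
from typing import Any, Callable, Iterable

Stage = Callable[[Any], Any]


def run_pipeline(readings: Iterable[Any], stages: list[Stage]) -> list[Any]:
    """Pass each sensor reading through every stage, in order."""
    results = []
    for reading in readings:
        value = reading
        for stage in stages:
            value = stage(value)
        results.append(value)
    return results


# Toy crop-monitoring stages (all assumptions, not a real agronomy model).
def detect(frame: list[float]) -> list[int]:
    """Stand-in detector: indices of patches with a low greenness score."""
    return [i for i, score in enumerate(frame) if score < 0.4]


def assess(stressed: list[int]) -> dict:
    """Stand-in domain logic: summarize what the detector found."""
    return {"stressed_patches": stressed, "count": len(stressed)}


def decide(report: dict) -> str:
    """Stand-in decision step: act only when enough patches look stressed."""
    return "dispatch inspection" if report["count"] >= 2 else "no action"


frames = [[0.9, 0.8, 0.7], [0.2, 0.3, 0.9]]  # one list of scores per frame
actions = run_pipeline(frames, [detect, assess, decide])
```

Swapping the stages while keeping `run_pipeline` unchanged is the point: a retail or energy system would plug in different detectors and decision rules but follow the same flow from sensor to action.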

Challenges on the Road Ahead

AI in the real world is powerful, but it's not without challenges. The same systems that keep us safe or make life easier can also raise tough questions.

One big concern is fairness. If the data feeding an AI has biases, the decisions it makes might not be fair either. That means we need to keep an eye on how these systems learn and what they act on.

Another is trust. AI often makes choices in ways that aren't easy for people to understand. If a car swerves, or a system raises an alarm, we need to know why.

And finally, there's the human side. As AI takes on more work, the real opportunity is not replacing people but finding the best ways for humans and machines to team up.

These challenges don't hold AI back; they guide us in shaping it responsibly.

Final Thoughts

AI is no longer confined to apps and screens; it's stepping into the real world, shaping how we move, work, heal, and stay safe. Its power lies not just in processing data, but in acting on it, often in ways that can save lives or transform daily routines.

Yet the real story of AI isn't only about smarter machines. It's about smarter choices. The more we build AI to be fair, transparent, and human-centered, the more it becomes a partner rather than just a tool.

The big picture, then, is clear: AI in the real world isn't the future arriving someday; it's already here. And how we guide it now will decide whether it simply changes our lives or truly improves them.